AI Reshapes Image Formation: Seeing the Night as Clearly as Day, Every Detail in Sharp Relief

Clear, real-time color images delivered at low cost, even at night: perhaps one day the ISP will not exist at all.

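"Color is joy," said Ernst Haas, one of the most famous and influential photographers of the 20th century. In the 1960s, when the "most serious" photographers would look only at a black-and-white world, this pioneer of color imaging was among the first to shoot on Kodak color film, expressing the undeniable power of color.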

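Around the same time, the French volcanologists Katia and Maurice Krafft used 16 mm movie cameras and a Nikon F2 film camera to record breathtaking volcanic activity. The orange-red lava gushing out looked like blood flowing from the beating heart of the Earth.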

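Advances in optical imaging have greatly extended our ability to see and to express ourselves, yet they have done little to free us from the limits on color. We cannot see everything at night as freely as we do by day, even though most magic happens after dark.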

I. The Human Eye, the ISP, and Digital Imaging

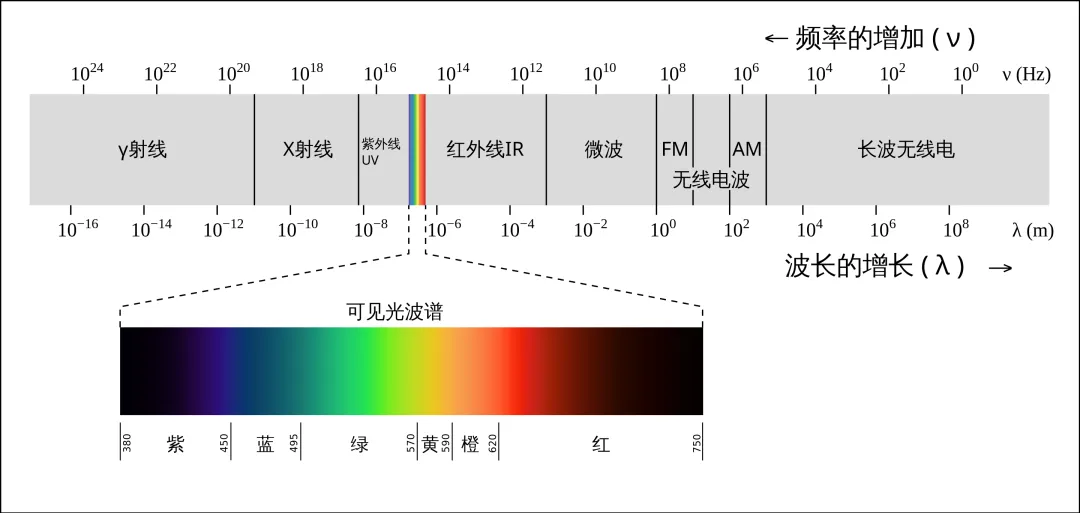

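Optical imaging was inspired by observing how human vision works. Visual processing begins when photons entering the eye strike one or more of the more than 100 million light-sensitive cells in the retina at the back of each eye: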

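Humans have about 130 million rod cells. These rod-shaped cells use rhodopsin to capture faint light, helping us perceive changes in brightness at a given light intensity, and they dominate our vision in dim twilight and at night.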

Visible light spans wavelengths of roughly 380-790 nm, and it is the only wavelength range that carries color information for us.

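Compared with the vast army of rods, humans have only about 7 million cone cells. Cones rely on their photopigments to tell colors apart, and they work properly only when there is enough light.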

In very dark environments the cones stop working and can no longer distinguish different wavelengths, so we see only a dim, gray scene.

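The two cell types are distributed differently across the retina and serve different functions: rods (blue) mainly sense light and dark, while cones (red) sense different colors.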

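The cameras in the phones, surveillance systems, and security devices around us are also visible-light cameras. One of their biggest differences from film photography is that the light-sensitive medium has changed from film to an image sensor (such as the common CMOS, short for complementary metal-oxide-semiconductor), which converts light signals into electrical signals.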

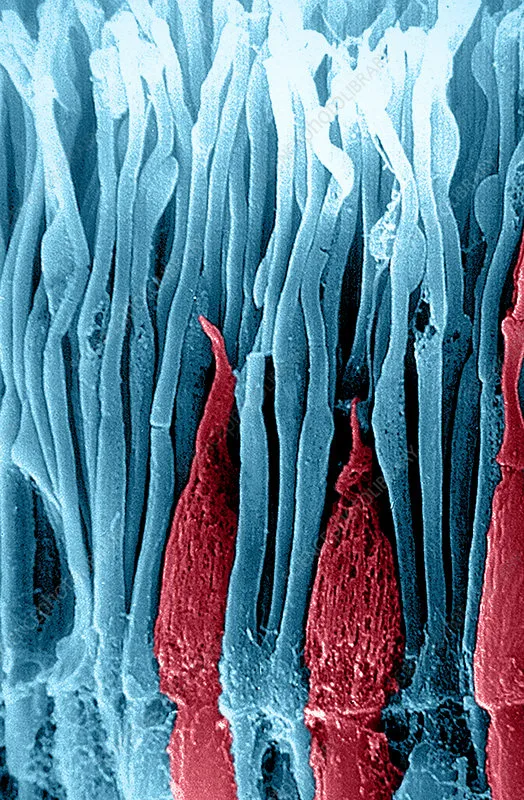

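A CMOS sensor is covered in a regular grid of tiny light-sensitive elements. Like dutiful little recorders, each one records the brightness at its position. These elements are called pixels.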

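A CMOS sensor is like the rod cells: it senses only the intensity of light, not its wavelength, so it cannot record color. Scientists therefore added a color filter layer in front of the image sensor. From the filtered result captured by the CMOS (the RAW image), dedicated algorithms compute the color of every pixel.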

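This is where the ISP (Image Signal Processor) comes in. It post-processes the voltage and current signals output by the front-end image sensor, aiming to faithfully restore the details of the scene and produce an image people can make sense of.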

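The imaging process of a digital camera. The ISP sits at the final checkpoint, using image algorithms to post-process the photoelectric signals received from the image sensor.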

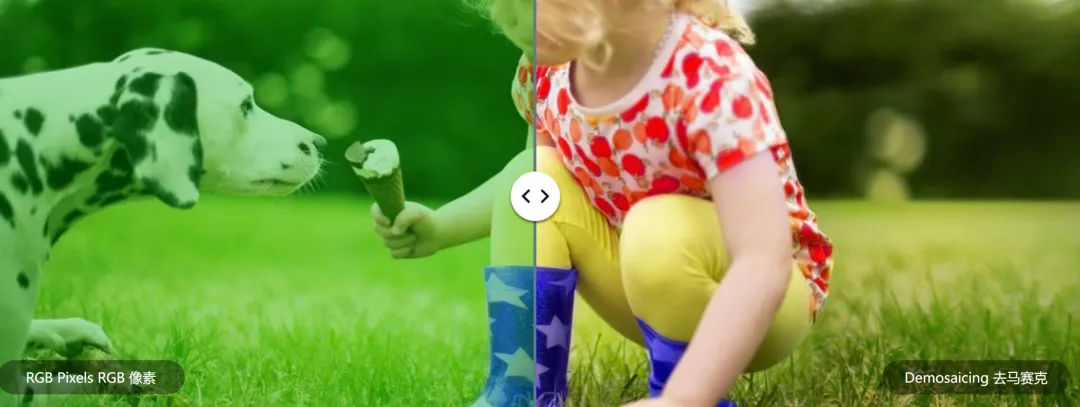

In fact, all of this post-processing is implemented with image algorithms. For example, the algorithm that infers each pixel's color is called demosaicing.

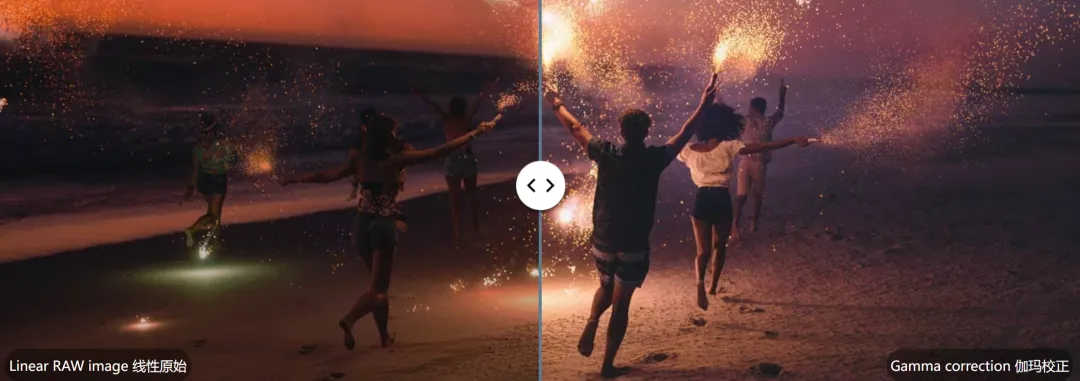
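To make demosaicing concrete, here is a minimal sketch assuming the most common color filter layout, an RGGB Bayer pattern, and the simplest reconstruction method, bilinear interpolation. The function names `mosaic_rggb` and `demosaic_bilinear` are hypothetical, and real ISPs use far more sophisticated, edge-aware methods.

```python
import numpy as np


def mosaic_rggb(rgb: np.ndarray) -> np.ndarray:
    """Simulate a sensor behind an RGGB Bayer filter: keep one color sample per pixel."""
    h, w, _ = rgb.shape
    raw = np.zeros((h, w), dtype=np.float64)
    raw[0::2, 0::2] = rgb[0::2, 0::2, 0]  # red sites
    raw[0::2, 1::2] = rgb[0::2, 1::2, 1]  # green sites on red rows
    raw[1::2, 0::2] = rgb[1::2, 0::2, 1]  # green sites on blue rows
    raw[1::2, 1::2] = rgb[1::2, 1::2, 2]  # blue sites
    return raw


def _filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 filtering with mirror padding; the kernels used here are symmetric, so no flip is needed."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")  # mirroring preserves the Bayer phase at the borders
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def demosaic_bilinear(raw: np.ndarray) -> np.ndarray:
    """Estimate full RGB at every pixel by averaging the nearest samples of each color."""
    h, w = raw.shape
    masks = np.zeros((h, w, 3))
    masks[0::2, 0::2, 0] = 1
    masks[0::2, 1::2, 1] = 1
    masks[1::2, 0::2, 1] = 1
    masks[1::2, 1::2, 2] = 1
    k_green = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]]) / 4.0
    k_red_blue = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]]) / 4.0
    rgb = np.empty((h, w, 3))
    for c, kernel in ((0, k_red_blue), (1, k_green), (2, k_red_blue)):
        # Zero out the other colors' sites, then fill the gaps from neighboring samples.
        rgb[..., c] = _filter3x3(raw * masks[..., c], kernel)
    return rgb


flat = np.ones((4, 6, 3)) * np.array([0.2, 0.5, 0.8])  # a uniformly colored scene
restored = demosaic_bilinear(mosaic_rggb(flat))
print(np.allclose(restored, flat))  # True
```

On a flat patch the interpolation is exact. Around edges and fine texture, bilinear averaging produces color fringes, which is why production demosaicing is more elaborate.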

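Almost every device now applies a "linear correction" automatically by default. The ISP applies a linear transform to the machine's rather dark raw output to counter its overly dim appearance, so that the final result matches what the eye actually sees.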
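A minimal sketch of what such a linear correction could look like. It assumes the sensor output has already been normalized to linear values in [0, 1] and that the goal is to raise the average brightness to a mid-gray target. The target of 0.18 and the gain cap are illustrative assumptions, not values from the article. Many real pipelines also follow this step with a nonlinear tone or gamma curve.

```python
import numpy as np


def linear_brighten(img: np.ndarray, target_mean: float = 0.18, max_gain: float = 16.0):
    """Apply one global gain so the image mean reaches target_mean, then clip to [0, 1].

    The gain is capped so that a nearly black frame is not amplified without limit.
    """
    current = float(img.mean())
    gain = min(target_mean / max(current, 1e-6), max_gain)
    return np.clip(img * gain, 0.0, 1.0), gain


dark = np.array([[0.01, 0.02], [0.03, 0.02]])  # mean brightness 0.02
bright, gain = linear_brighten(dark)
print(round(gain, 3))  # 9.0
print(bright)
```

Because the same gain multiplies every pixel, relative brightness is preserved. Anything pushed above 1.0 is clipped, which is one reason a single global gain is only a first step.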

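In low light, the image sensor struggles to receive enough light information. A higher ISO (sensitivity) amplifies the weak signal, and a slower shutter speed lets the sensor collect more photons. Both tend to produce noisy images: amplification boosts noise along with the signal, and long exposures can heat up the sensor. ISPs use advanced denoising algorithms to reduce various kinds of color and pattern noise while preserving texture detail.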
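A small simulation shows why collecting more light helps while extra gain does not. The sketch assumes that the only noise source is photon shot noise, modeled as a Poisson process; read noise and heat-related dark current are ignored. Under that assumption, the signal-to-noise ratio grows roughly with the square root of the photon count. Multiplying by a gain, which is what a higher ISO does, scales signal and noise together and leaves the ratio unchanged.

```python
import numpy as np


def shot_noise_snr(mean_photons: float, gain: float = 1.0, n_pixels: int = 200_000, seed: int = 0) -> float:
    """Estimate the SNR (mean / std) of a flat patch whose pixels receive Poisson-distributed photons."""
    rng = np.random.default_rng(seed)
    counts = rng.poisson(mean_photons, size=n_pixels).astype(np.float64)
    signal = gain * counts  # a gain amplifies signal and noise alike
    return float(signal.mean() / signal.std())


for photons in (10, 100, 1_000, 10_000):
    # Columns: photons per pixel, simulated SNR, sqrt(photons) for comparison.
    print(photons, round(shot_noise_snr(photons), 1), round(float(np.sqrt(photons)), 1))

# Same photon count with 8x gain: the SNR is unchanged (up to floating-point rounding).
print(shot_noise_snr(100), shot_noise_snr(100, gain=8.0))
```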

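White balance aims to reproduce objects' true colors accurately across all kinds of complex scenes, so that even a sheet of white paper photographed under incandescent light still comes out white. Auto exposure control analyzes brightness information from the sensor and uses it to compute and set the aperture, shutter speed, and ISO so that the image is properly exposed.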
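Both controls can be sketched in a few lines. The white balance below uses the gray-world assumption, which holds that the average color of a scene is neutral gray. This is one classic heuristic among many and not necessarily what any particular ISP uses. The exposure update is a simple proportional step toward a mid-gray target of 0.18, which is also an assumption. The function names are hypothetical.

```python
import numpy as np


def gray_world_white_balance(rgb: np.ndarray):
    """Scale R and B so that all three channel means match the green mean."""
    means = rgb.reshape(-1, 3).mean(axis=0)
    gains = means[1] / np.maximum(means, 1e-6)  # the green gain is 1 by construction
    return np.clip(rgb * gains, 0.0, 1.0), gains


def auto_exposure_step(mean_luma: float, exposure: float, target: float = 0.18) -> float:
    """One proportional AE update: scale exposure so the next frame's mean approaches target."""
    return exposure * target / max(mean_luma, 1e-6)


# White paper under a warm (incandescent-like) cast: red strong, blue weak.
paper = np.full((2, 2, 3), 0.5) * np.array([0.9, 0.6, 0.3])
balanced, gains = gray_world_white_balance(paper)
print(gains)           # per-channel gains
print(balanced[0, 0])  # all three channels now come out at about 0.3

# A frame half as bright as the target gets roughly twice the exposure.
print(auto_exposure_step(mean_luma=0.09, exposure=10.0))
```

Gray-world fails on scenes dominated by one color, such as a frame filled with grass. That is one reason white balance is listed among the hard problems an ISP has to solve.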

The ISP therefore largely determines the image quality of a digital camera, and it is often called the "brain" of an imaging device.

II. The Dilemma of the ISP and Traditional Night Vision

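Visible light, however, is a magician that sets plenty of hard problems for the ISP. By day, when the light is too strong or the contrast too high, the human eye struggles, and so does the camera. Examples include shooting into the light, or a car emerging from a tunnel into sudden glare.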

Once the sun sinks below the horizon and the light becomes extremely weak, a traditional ISP can see almost nothing.

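"By military standards, a full moon gives about 0.1 lux (lux measures luminous flux per unit area), and a quarter moon about 0.01 lux. With no moon and only starlight, it is about 0.001 lux. We define this starlight level of illuminance as extremely low light," explains Zhang Qining, CEO of Shenzhi Weilai (深知未来).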

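Every night, poorly lit places in Shenzhen such as city parks and lakes are essentially in extremely low light. Parking in a residential compound is another low-light or extremely low-light scenario, because dim streetlights make reversing difficult.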

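Since its founding at the end of 2017, Shenzhi Weilai has used its in-house AI ISP technology to push past the limits of extremely low-light conditions and deliver real-time full-color imaging in them. These conditions include low illuminance, backlighting and other complex lighting, and rain, snow, and fog.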

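In outdoor sports, more and more people enjoy hiking at night. In Shenzhen, one or two people get lost on the mountains at night almost every week, and once rescue teams are alerted, they search the mountains with drones.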

Darkness is also a natural shield for criminals. Nearly 70% of crimes happen at night, and the period from 7 p.m. to 5 a.m. is when crime peaks.

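Many other tasks also operate where light is too weak for conventional cameras to detect anything at night, so infrared cameras are required. These include guarding more than 20,000 km of borders, monitoring violations in mountain and desert oilfield operating areas, routine river patrols under the Yangtze River's ten-year fishing ban, power line inspection, and wildlife monitoring.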

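In some national nature reserves you can see infrared cameras wired to tree trunks to monitor wildlife. Such a camera actively emits an infrared beam (invisible light) to illuminate the target, then converts the infrared image reflected by the target into a visible image for night observation.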

A leopard cat recorded at night by an infrared camera in a nature reserve.

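This kind of active infrared night vision works even in total darkness. However, it receives reflected infrared light from a single band, which contains none of the visible primaries such as green and blue, so it cannot render color. After processing, infrared images can only be shown in black and white. That falls short whenever more target detail, such as color or markings, needs to be captured.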

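In urban surveillance, highly reflective objects such as license plates are easily overexposed under infrared fill light. Yet clothing color, vehicle color, and license plates are often the key clues for solving a case and cannot be lost.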

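Laser night vision devices work on a similar principle to infrared ones and are also a form of active imaging, but they can see farther, up to several kilometers. They face the same signal interference problems, and the modules are expensive, which indirectly raises the total cost of building a high-quality camera system (visible plus non-visible light).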

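Besides these common active imaging methods, there is also passive infrared night vision. A thermal imager collects the invisible thermal radiation from every object in the scene and converts the heat distribution into a video image. It is also widely used.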

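For example, thermal imagers can check power lines for poor contacts, leakage, overheating, or encroaching trees. Mounted on drones, they can also track the movements of elephant herds, suspicious groups of people, and vehicles, or watch for suspicious people, vehicles, and vessels in oilfields and at sea.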

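A thermal imager monitoring a moving elephant herd. Thermal imaging relies on the fact that every object above absolute zero constantly emits infrared radiation.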

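Like visible-light imaging, thermal imaging is a passive sensing method. But the resulting images lose much feature and texture information and look ghost-like.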

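In Zhang Qining's view, a face in a thermal image appears as an overall blur. Facial details such as the eyes, the nose, and even wrinkles are hard to make out, so high-quality visual imaging is difficult.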

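Because image quality is low, false alarms are common when the temperature differences among targets are small, for example in wildfire monitoring. In addition, thermal imagers typically lack optical zoom, so they cannot resolve distant targets, whereas visible-light lenses can detect targets much farther away.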

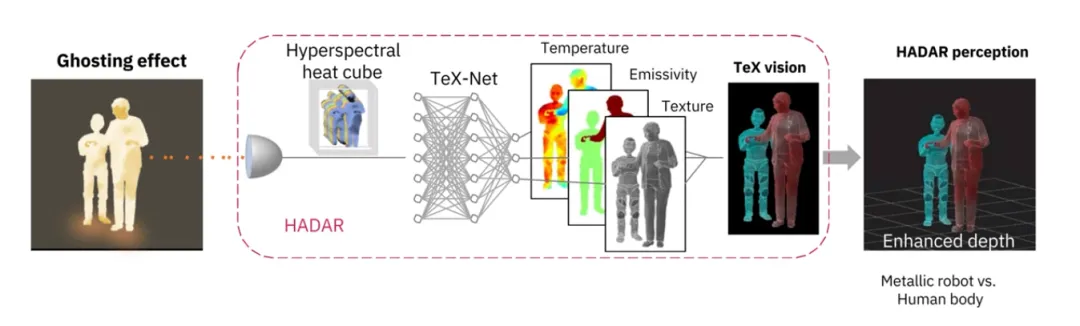

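Nature recently reported that researchers at Purdue University and Los Alamos National Laboratory in the US have developed a heat-assisted detection and ranging (HADAR) system. It trains artificial intelligence (AI) to determine the temperature, energy signature, and physical texture of every pixel in a thermal image, and it produces images almost as clear as those a conventional camera takes in daylight.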

That issue of Nature featured the HADAR research on its cover.

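The study proposes HADAR, which combines thermal physics, infrared imaging, and machine learning in an attempt to recover target textures and overcome the ghosting effect.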

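The technique is really a form of pseudo-color that predicts an object's color from its material. Zhang Qining has also taken note of the study. "It's like drawing with crayons. The crayons are all made of the same material, yet each has its own color, so it is actually hard to predict what color a given crayon is."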

Commercially, HADAR is at an even greater disadvantage.

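The first digital cameras had only 280,000 pixels. Since then, the industry has worked relentlessly to pack more photosites into a small CMOS area, pushing resolution from 1 megapixel and 5 megapixels to over 10 megapixels, 35 megapixels, and even more than 100 megapixels. Image quality is now fully comparable to that of traditional film cameras.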

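Today's phone cameras commonly have tens of megapixels, while high-end thermal imagers have only about a million pixels. The reason is that the pixels of the core component, the detector, cannot be made small: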

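Thermal imaging uses infrared light with very long wavelengths (8 to 14 micrometers). A pixel cannot usefully be much smaller than the wavelength it detects, so the detector's pixels must be made very large. Visible-light camera pixels are only 1-2 micrometers across, while each pixel on a thermal imager's detector measures 12-17 micrometers.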

For the same sensor size, a thermal imager therefore has far fewer pixels than a visible-light camera, and its images are naturally much worse.
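The effect of pixel pitch comes down to simple arithmetic: on a detector of fixed size, the pixel count falls with the square of the pitch. The sketch assumes a hypothetical detector area of 7.68 mm × 6.144 mm, which is the footprint of a 640 × 512 array at 12 µm. It compares the 12 µm thermal pitch from the text with a 2 µm visible-light pitch.

```python
def pixel_count(width_um: int, height_um: int, pitch_um: int) -> int:
    """How many whole pixels of a given pitch fit on a detector of the given size (all in micrometers)."""
    return (width_um // pitch_um) * (height_um // pitch_um)


WIDTH_UM, HEIGHT_UM = 7_680, 6_144  # hypothetical detector footprint

thermal = pixel_count(WIDTH_UM, HEIGHT_UM, 12)  # 640 x 512
visible = pixel_count(WIDTH_UM, HEIGHT_UM, 2)   # 3840 x 3072
print(thermal, visible, visible // thermal)     # 327680 11796480 36
```

A pitch 6 times larger means 6 × 6 = 36 times fewer pixels on the same area. At 17 µm, the upper end of the range in the text, the same area holds only 451 × 361 pixels.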

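For a thermal detector of a given size, the smaller the pixel pitch, the more pixels it holds and the higher its resolution. Alternatively, the extra pixels can cover a wider field of view at the same level of detail.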

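Thermal imaging chips are hard to shrink, and even at high volume their cost cannot be brought below that of CMOS. Zhang Qining believes thermal imaging will keep a clear advantage in specific niches, such as detecting signs of life in scenes with no light at all. In other scenarios that require careful discrimination of detail, its advantage is much less pronounced.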

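To date, cameras in extremely low light have "basically stayed in the black-and-white era," and there has been no particularly good way to achieve color imaging. According to Zhang Qining, there are almost no methods for achieving high signal-to-noise image quality in extremely low light.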

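In his memoir, Sony co-founder Akio Morita described Sony's in-house development of the Trinitron in the 1960s, when he believed color TV was the way of the future.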

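People will pay for a better experience. Color film, color movies, and color TV all displaced their black-and-white rivals this way. In 2016, Time magazine named Sony's Trinitron (color picture tube) TV to its list of the 50 most influential gadgets of all time, alongside the iPod, iPhone, Macintosh, and Google Glass.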

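In terms of features, video recording, storage, and night vision have become standard in cameras. According to RUNTO's 2022 annual report, 97% of cameras support night vision, and the feature is gradually moving toward color. The share of day-and-night full-color cameras grew from 20% in January to 31% in December.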

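"Moore's law is still at work. In the future, when AI compute is cheap enough and its power consumption low enough, we will be able to give every camera a new night vision engine at modest cost," says Zhang Qining.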

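"If the price-performance and power consumption are on par with today's imaging chips, why wouldn't we use a full-color night vision camera?"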

III. Another Path: Fusing AI and Moving the ISP into Software

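"Our technology can already produce genuinely high-quality images in extremely low light and make out fine details of people and objects in the dark." According to Zhang Qining, it improves many key imaging tasks by several hundred times.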

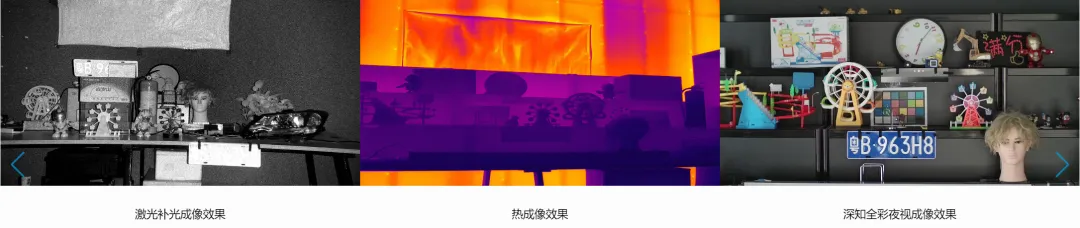

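Comparison in extremely low light: a laser solution (left), thermal imaging (center), and Shenzhi Weilai's full-color night vision imaging (right).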

"For example, traditional ISP hardware can only image down to 0.1 lux. With our AI ISP enhancement, we can image at 0.0001 lux."

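How clearly you can see depends on the size of the target. If the monitoring range extends to 10 km, he explains, tall buildings, bridges, and other huge structures are still visible in extremely low light. At a range of 3-5 km, monitoring is essentially limited to ships at sea and large vehicles on the ground.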

"To see a person clearly, current optics can only reach about one or two hundred meters."

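Typhoon "Doksuri" passes through Quanzhou, Fujian, where heavy rain triggered urban flooding and other secondary disasters. The Nan'an Xunjie Rescue Team of Quanzhou used a drone carrying Shenzhi Weilai's S2 full-color night vision camera payload for night-time rescue work.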

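Learning to See in the Dark, a CVPR 2018 paper from Intel Labs (with co-authors from UIUC), used a single model to fit the entire ISP process. It takes RAW data as input and directly outputs a finished sRGB image, with stunning results.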

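The paper was a hit. In a sense, it demonstrated that every ISP function could be implemented by one neural network. Commercially in particular, it extended the useful range of visible-light camera systems and opened up the possibility of delivering real-time, clear, full-color images day and night at lower cost.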

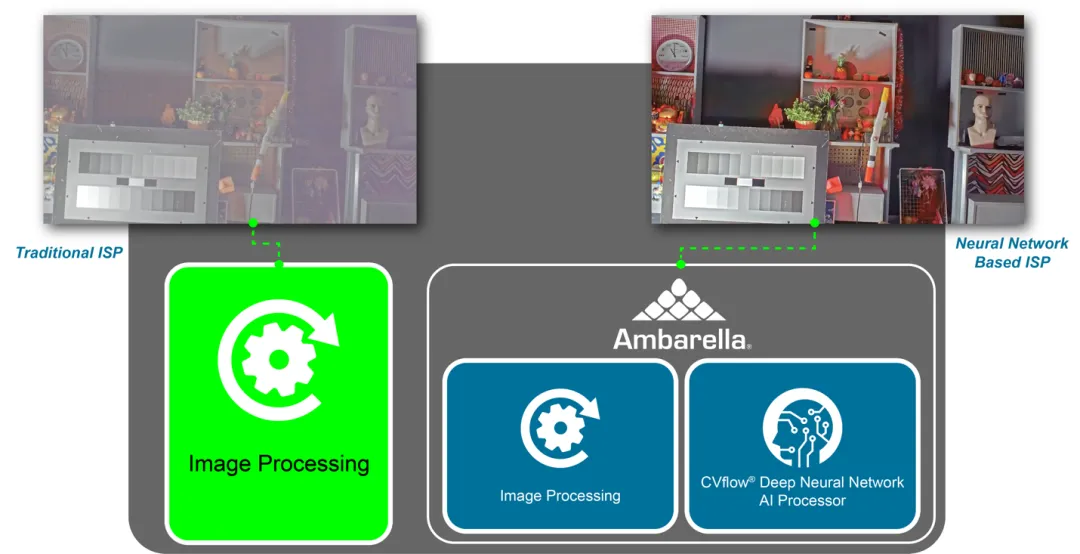
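The input side of that approach is easy to sketch. As described in the paper, the Bayer RAW frame is packed into four half-resolution channels. The sensor's black level is subtracted, and the result is multiplied by an amplification ratio before it enters a convolutional network that outputs sRGB. The function below is a hypothetical helper that mirrors only this preprocessing. The black level of 512 and white level of 16383 are assumptions for a 14-bit sensor, the ratio is a free parameter, and the network itself is omitted.

```python
import numpy as np


def pack_and_amplify(raw: np.ndarray, black_level: int = 512, white_level: int = 16383,
                     ratio: float = 100.0) -> np.ndarray:
    """Turn an RGGB Bayer frame (H x W integers) into an amplified H/2 x W/2 x 4 network input."""
    # Remove the sensor's black offset and normalize to [0, 1].
    norm = np.maximum(raw.astype(np.float32) - black_level, 0.0) / (white_level - black_level)
    packed = np.stack(
        [norm[0::2, 0::2],   # R
         norm[0::2, 1::2],   # G (red rows)
         norm[1::2, 1::2],   # B
         norm[1::2, 0::2]],  # G (blue rows)
        axis=-1,
    )
    # Brighten the short exposure by the exposure ratio, keeping values in [0, 1].
    return np.minimum(packed * ratio, 1.0)


dark_frame = np.full((4, 6), 612, dtype=np.uint16)  # only 100 DN above the black level
x = pack_and_amplify(dark_frame)
print(x.shape)  # (2, 3, 4)
```

Packing keeps every RAW sample intact and lets the network decide how to demosaic, denoise, and color-correct, instead of inheriting those decisions from a fixed ISP.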

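Companies including Shenzhi Weilai, Ambarella, Huawei HiSilicon, Axera, and Yanqing Technology then began exploring how to build visual imaging engines with neural networks.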

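Lenses and CMOS sensors are mainly analog devices, which makes it hard to insert algorithms into them. Both industries are also very mature, so fundamental breakthroughs are unlikely (except in materials).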

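The ISP, however, is all about algorithms. It processes the electrical signals it receives so heavily that a lot of useful information is erased. Any attempt to improve downstream recognition starts from that degraded output, so the opportunity has already been lost. In dark and high-dynamic-range scenes in particular, image distortion and information loss are unavoidable.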

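For example, some CMOS sensors already reach a dynamic range of 160 dB, yet most traditional ISPs are still at 48 dB. It is like a highway that leads onto a country lane. Because the ISP's country lane can carry only limited traffic, it processes the incoming electrical signals in ways that discard a lot of information, for example by cutting off the darkest and brightest parts.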
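To put the two figures on a common scale, note that image sensor dynamic range is conventionally quoted as 20·log10 of the ratio between the largest and smallest measurable signal. The short conversion below assumes the quoted numbers follow that convention and also expresses each ratio in bits.

```python
import math


def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB (20*log10 convention) to a max/min signal ratio."""
    return 10 ** (db / 20)


def ratio_to_bits(ratio: float) -> float:
    """Number of doublings (stops) the ratio spans."""
    return math.log2(ratio)


for label, db in (("typical traditional ISP", 48), ("high-end CMOS", 160)):
    ratio = db_to_ratio(db)
    print(f"{label}: {db} dB = {ratio:,.0f}:1 = {ratio_to_bits(ratio):.1f} bits")
```

Under this convention, 48 dB is about a 251:1 range, or roughly 8 bits. 160 dB spans 10^8:1, or about 26.6 bits, so most of the sensor's range has to be compressed or discarded to fit through the narrower stage.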

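Eliminating the entire ISP in one go is not yet reliable, and edge devices have limited compute, along with power and cost constraints in deployment. Could the key steps that determine image quality be handed to AI instead, extracting more information directly from the raw data (such as the sensor output)? For example, one DNN could handle white balance and another demosaicing, with many neural networks working together.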
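This stage-by-stage idea can be pictured as a pipeline of interchangeable functions. In the hypothetical sketch below, every stage has the same signature, so a hand-tuned stage can be swapped for a learned one without touching the rest. `make_learned_denoise` is only a stand-in for where a trained DNN would go. Here it is a single 3×3 filter with placeholder weights, not a real network, and all names are illustrative.

```python
from typing import Callable, List, Tuple

import numpy as np

Stage = Callable[[np.ndarray], np.ndarray]


def subtract_black_level(x: np.ndarray, black: float = 0.05) -> np.ndarray:
    """Hand-written stage: remove the sensor's black offset."""
    return np.clip(x - black, 0.0, None)


def apply_3x3(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 filtering with mirror padding."""
    h, w = x.shape
    p = np.pad(x, 1, mode="reflect")
    return sum(kernel[dy, dx] * p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))


def hand_tuned_denoise(x: np.ndarray) -> np.ndarray:
    """Traditional stage: a fixed 3x3 mean filter."""
    return apply_3x3(x, np.full((3, 3), 1 / 9))


def make_learned_denoise(weights: np.ndarray) -> Stage:
    """Hypothetical learned stage: a 3x3 filter whose weights would come from training."""
    return lambda x: apply_3x3(x, weights)


def run_pipeline(raw: np.ndarray, stages: List[Tuple[str, Stage]]) -> np.ndarray:
    """Run each stage in order; any stage can be replaced independently."""
    for _name, stage in stages:
        raw = stage(raw)
    return raw


traditional = [("black_level", subtract_black_level), ("denoise", hand_tuned_denoise)]
hybrid = [("black_level", subtract_black_level),
          ("denoise", make_learned_denoise(np.full((3, 3), 1 / 9)))]  # placeholder weights

frame = np.full((4, 4), 0.3)
print(np.allclose(run_pipeline(frame, traditional), run_pipeline(frame, hybrid)))  # True
```

The cost of this modularity is that each learned stage still only sees what the previous stages pass along.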

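Following this line of thinking, Huawei HiSilicon released its Yueying AI ISP chip in 2021, which is widely seen as having pushed the whole security industry to change its approach to the ISP. The Yueying AI ISP can intelligently distinguish signal from noise in an image, enabling intelligent denoising in low-light scenes.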

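In 2022, Ambarella, which has 17 years of experience in ISP processing, also announced an AI ISP. The company says it enables color imaging at very low illuminance with minimal noise, performs 10 to 100 times better than mainstream ISPs, and offers more natural color reproduction and higher dynamic range.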

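Shenzhi Weilai likewise used deep learning to learn the distribution characteristics of noise and signal. It trained an AI algorithm that separates noise from true signal in extremely weak light and, while denoising, boosts the true signal to normal-light levels. This improves the signal-to-noise ratio by up to 25 dB and enables normal imaging in extremely low light.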

Shenzhi Weilai's AI ISP technology: up to 25 dB SNR improvement, enabling normal imaging in extremely low light.

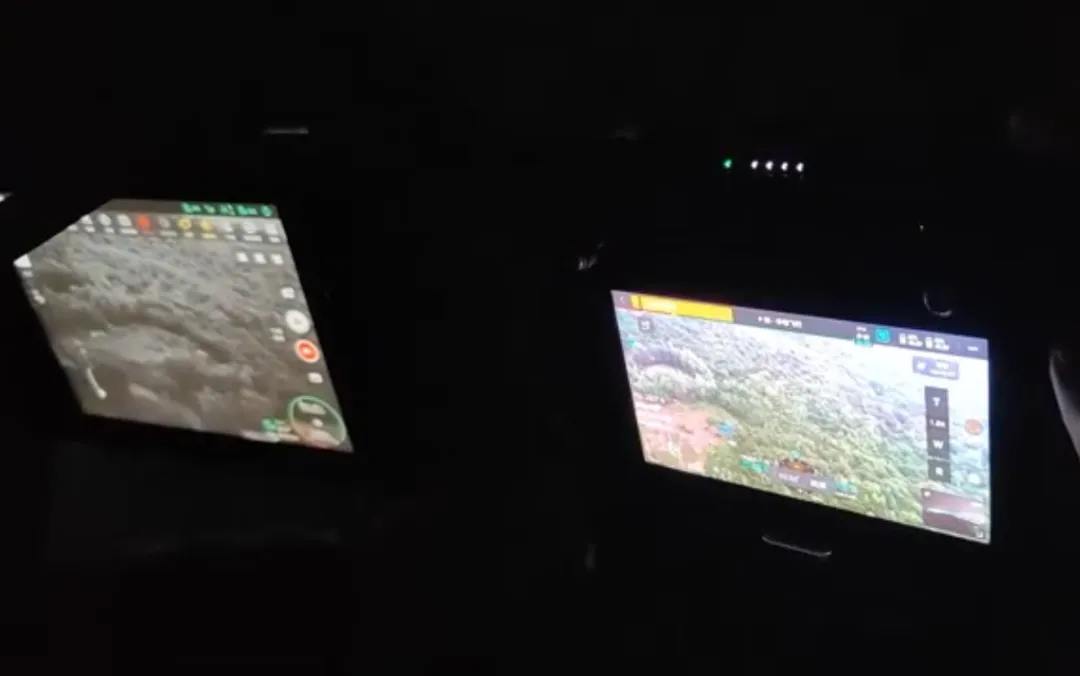

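The Kunming Fire Rescue Detachment in Yunnan tested Shenzhi Weilai's S3 night vision camera payload on a drone at night. The image compares the S3 with other night vision camera payloads.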
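For scale, an SNR gain of 25 dB means the signal-to-noise power ratio improves by a factor of 10^2.5, about 316, or about 17.8 in amplitude. The sketch below shows how such an SNR figure is typically measured against a clean reference. It runs on synthetic data and uses a plain 5 × 5 box filter as a stand-in denoiser. This is a classic filter, not the learned approach described above, and the scene and noise level are assumptions.

```python
import numpy as np


def snr_db(clean: np.ndarray, estimate: np.ndarray) -> float:
    """SNR in dB: signal power divided by the power of the error against a clean reference."""
    noise = estimate - clean
    return float(10 * np.log10(np.sum(clean ** 2) / np.sum(noise ** 2)))


def box_filter(img: np.ndarray, k: int = 5) -> np.ndarray:
    """k x k mean filter with mirror padding (k odd)."""
    r = k // 2
    h, w = img.shape
    p = np.pad(img, r, mode="reflect")
    out = np.zeros_like(img)
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + h, dx:dx + w]
    return out / (k * k)


rng = np.random.default_rng(0)
clean = np.tile(np.linspace(0.1, 0.3, 64), (64, 1))      # smooth synthetic scene
noisy = clean + rng.normal(0.0, 0.05, size=clean.shape)  # additive Gaussian noise
denoised = box_filter(noisy)

gain_db = snr_db(clean, denoised) - snr_db(clean, noisy)
print(f"SNR improvement: {gain_db:.1f} dB")
print(f"25 dB as a power ratio: {10 ** 2.5:.0f}x, as an amplitude ratio: {10 ** 1.25:.1f}x")
```

A box filter only averages, so it blurs real detail along with the noise. Learning the separate statistics of signal and noise is how a trained model aims to do better.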

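The strength of neural networks lies in their ability to model complex scenes. This lets their image quality surpass that of traditional ISPs, particularly for denoising and contrast enhancement at extremely low illuminance.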

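"Everything we do comes down to collecting enough data to strengthen our modeling capability, especially for all kinds of corner cases." Zhang Qining gives an example: people who grew up in Shenzhen may not be able to imagine how dark nights are on the Tibetan plateau or on glaciers. "We had never seen scenes that dark. Across China, or even the world, what other extreme conditions will imaging run into? Can our algorithms cover them?"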

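Collecting these bad cases and training on them specifically makes the system better at handling complex scenes, and the ISP parameters can be updated in real time. Iterating on the vision model is enough to upgrade a chip product's image quality quickly.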

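By contrast, a traditional ISP has to run on an FPGA or ASIC, because it needs strictly timed hardware to keep latency under control. Its logic is completely hardwired into circuitry, so it cannot be effectively customized and can never be upgraded.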

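Combining AI with the traditional ISP is currently a strong trend among phone makers, who use it to improve photo quality and strengthen brand differentiation. AI ISPs are also moving into security, drones, and even autonomous driving.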

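"Since last year, our largest commercial application has been full-color night vision camera payloads for industrial drones," Zhang Qining told us. The business model in the drone market has been validated, and the company now has a mature product line.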

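Industrial drones are widely used in China, including in public safety, fishery administration, border and coastal defense, firefighting, and emergency response. More than 200 industrial-drone companies currently operate in China. They focus mainly on three key areas: agricultural crop protection, power line inspection, and police and security work.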

Meanwhile, Shenzhi Weilai is also exploring the consumer market for night vision cameras, such as handheld night vision scopes.

IV. Toward 2.0: Doing Away with the ISP

In Zhang Qining's view, "we are all still in the AI ISP 1.0 era: part traditional ISP pipeline, part neural network. It is essentially a transitional stage."

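Current solutions need both a traditional ISP and an NPU, so they inevitably cost more and consume more power than before, and a like-for-like replacement is not easy.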

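Chip area is precious, yet room must still be reserved for the ISP, and a fairly large area at that, sometimes even larger than the NPU. At times the ISP also consumes more power than the NPU.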

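Because the ISP and NPU have to work together, the data exchange between them keeps the NPU from running at full capacity. Its utilization is often only somewhere between 10% and 20%.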

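That said, ISP technology keeps evolving, and fusion with AI is only one direction. Some argue that the AI ISP cannot fully replace the traditional ISP either, because of its own shortcomings in power, edge compute, training and inference costs, and so on.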

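Zhang Qining, however, believes the AI-fusion path must keep evolving until it becomes the obvious, no-brainer choice for everyone. Only then can large-scale replacement truly happen. "Next, we want to cut out every traditional ISP step entirely and replace it with neural networks."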

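From the day vision first appeared, it has used neural networks to form images. Thanks to vision, trilobites became the rulers of the Cambrian seas and survived on Earth for nearly 300 million years before going extinct. Human vision itself is a very pure neural network.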

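Shenzhi Weilai expects to have a prototype of its 2.0 framework by the end of this year. According to Zhang Qining, it is an all-in-one neural network that no longer depends on any traditional ISP pipeline.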

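"You can think of it as a multitask neural network that can handle a great many tasks, unlike current solutions that need many neural networks working together."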

"It needs only an NPU. It is an entirely new species."